Privacy-Preserving AI Techniques for Edge Devices

Discover how federated learning and differential privacy keep user data secure on IoT devices while maintaining strong AI performance.

Written by

Adam Stewart

Key Points

- Keep sensitive data local with federated learning: devices share model updates, never raw data

- Add calibrated noise to data and query results while keeping your analytics accurate and useful

- Balance strong privacy with the limited resources of edge devices

- Build customer trust with clear, honest data handling practices

As edge devices like smart home gadgets, wearables, and IoT sensors become ubiquitous, protecting user privacy is crucial. This article explores techniques to safeguard personal data in edge AI systems.

Key Privacy Techniques

- Federated Learning: Trains AI models across devices without sharing local data, keeping data on devices to reduce breach risks.

- Differential Privacy: Adds calibrated random "noise" to data or query results so that no individual record can be singled out, protecting user privacy while still allowing aggregate analysis.

- Secure Data Processing:

- Secure Multi-Party Computation: Allows joint computation without revealing individual records.

- Homomorphic Encryption: Enables computations on encrypted data without decrypting.

Challenges

| Challenge | Description |

|---|---|

| Limited Resources | Edge devices have limited computing power, memory, and battery life, making complex AI models difficult. |

| Privacy vs. Performance | Privacy techniques can reduce model accuracy and increase latency. |

| Lack of Standards | Clear guidelines and regulations are needed for widespread adoption of privacy-preserving AI. |

Future Research Areas

| Area | Description |

|---|---|

| Efficient Privacy Algorithms | Balancing privacy, performance, and computing efficiency. |

| Edge AI Architecture | Designing systems that prioritize privacy, security, and scalability. |

| Explainability and Transparency | Explaining AI decisions while preserving privacy. |

| User-Centric Design | Ensuring user privacy and trust in edge AI systems. |

Protecting user privacy is crucial as edge AI systems become more prevalent. Continued research into efficient, scalable, and user-centric privacy techniques is essential for building trustworthy edge AI applications.

Privacy Risks in Edge AI

Data Collection and Use

Edge AI devices gather and use various types of data, including:

- Personal Information: Details that identify individuals, like names and contact info

- Location Data: Where the device is used and how it moves

- Sensor Data: Information from cameras, microphones, and other sensors

This data helps improve AI models, enable real-time processing, and provide personalized experiences. However, collecting and using this data raises privacy concerns.

Potential Privacy Issues

Edge AI systems face privacy threats, such as:

- Data Breaches: Hackers exploiting vulnerabilities to access sensitive data

- Unauthorized Access: Malicious actors misusing AI systems for surveillance

- Decentralized Risks: Challenges ensuring privacy across the entire edge computing system

Building User Trust

To build and maintain user trust in edge AI technologies, developers must:

- Inform Users: Explain what data is collected, how it's processed, and who has access

- Implement Security: Use encryption, access controls, and other measures to protect data

- Be Transparent: Provide clear information about data practices

- Allow User Consent: Give users control over how their data is used

Addressing privacy concerns through transparency, accountability, and user consent is essential for the responsible development of edge AI systems.

Federated Learning

What is Federated Learning?

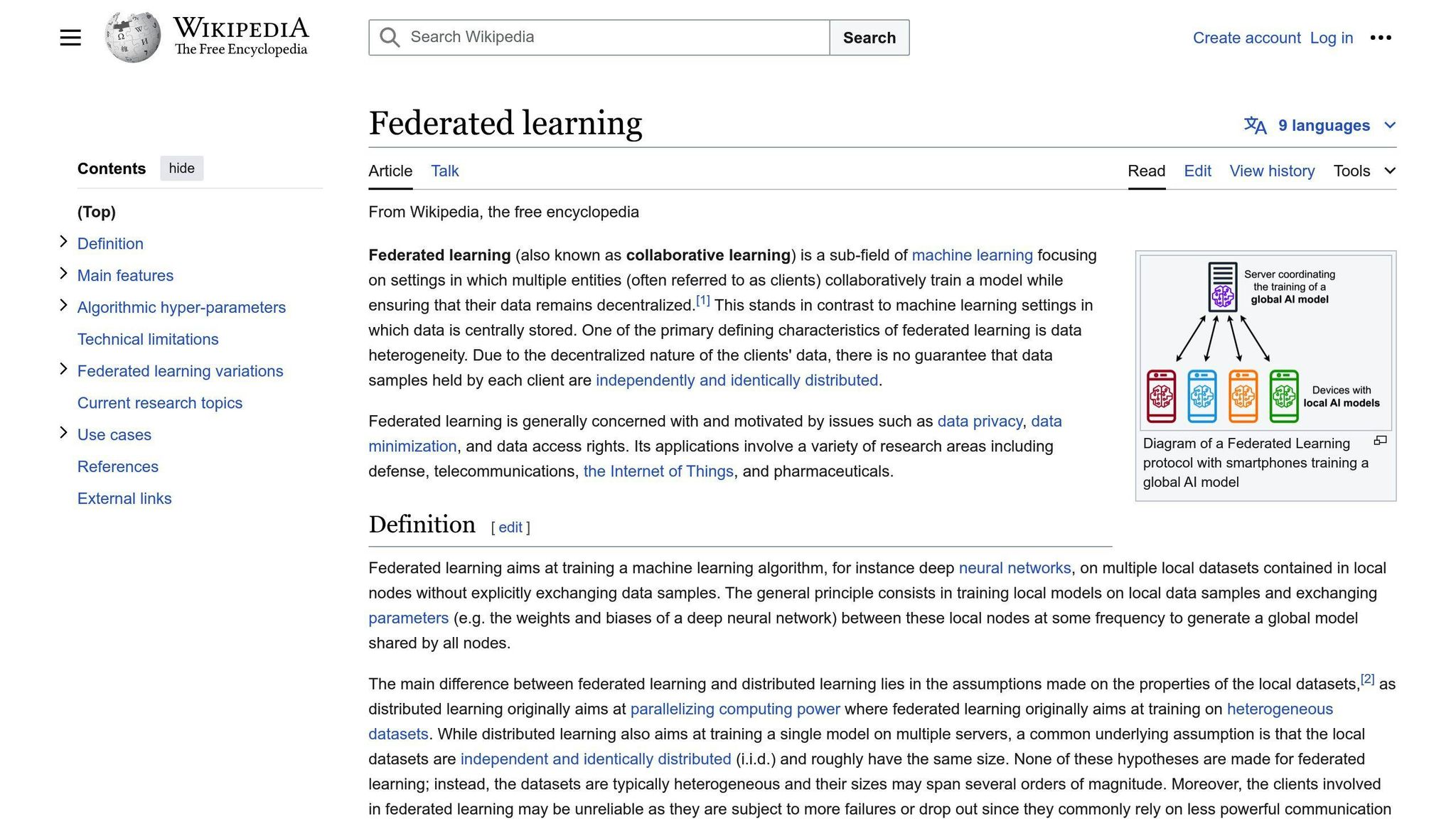

Federated Learning is a way to train AI models across many devices without sharing their local data. Instead of sending data to a central server, the devices calculate model updates locally and only share those updates with the server. This keeps the data on the devices, reducing the risk of data breaches and unauthorized access.
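
The update-sharing loop described above can be sketched in a few lines. This is a minimal illustration that assumes federated averaging (a common aggregation scheme, not necessarily the one any given framework uses) on a tiny linear model. The names `local_update`, `federated_average`, and `train`, and the sample readings, are hypothetical.

```python
from typing import List, Tuple

Weights = List[float]                 # [slope, intercept] of a tiny linear model
Dataset = List[Tuple[float, float]]   # (x, y) pairs that stay on the device


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.05, epochs: int = 5) -> Weights:
    """Run a few gradient-descent steps on one device's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / len(data)
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]  # only these two numbers are sent to the server


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(sizes)
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total
            for i in range(len(updates[0]))]


def train(clients: List[Dataset], rounds: int = 200) -> Weights:
    """Repeat: broadcast global weights, train locally, aggregate."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


# Two hypothetical devices whose private readings both follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
]
slope, intercept = train(clients)
print(round(slope, 3), round(intercept, 3))
```

With these toy readings the global model should settle close to a slope of 2 and an intercept of 1, even though the server never sees an (x, y) pair. A real deployment would also need secure transport, client sampling, and handling for devices that drop out mid-round.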

Benefits for Privacy

Federated Learning offers these privacy benefits:

- Data stays local: By keeping data on devices, Federated Learning lowers the chances of data breaches and unauthorized access.

- Improved security: Raw data is never sent to a central server, so there is no central store of user records for attackers to target.

- User trust: Giving users control over their data and keeping it on their devices builds trust with AI developers.

Using on Edge Devices

To use Federated Learning on edge devices, consider the device's computing power and network connection. Edge devices have limited processing and memory, making complex machine learning tasks challenging. But advances in hardware and software now allow Federated Learning on edge devices, enabling real-time processing and reduced latency.

Challenges

While Federated Learning has benefits, it also has challenges:

| Challenge | Description |

|---|---|

| Computational load | Federated Learning can be computationally intensive, needing significant processing power and memory. |

| Network differences | Edge devices may have varying network connectivity, affecting Federated Learning performance. |

| Data quality | The quality of training data can greatly impact the model's accuracy. |

Despite these challenges, Federated Learning has the potential to change how AI models are trained and used, enabling privacy-preserving AI applications that can help individuals and society.

Differential Privacy

What is Differential Privacy?

Differential privacy is a way to protect individual data privacy. It ensures that the results of an analysis do not reveal too much information about any one person's data, even if an attacker has access to the results and some additional information.

The key idea is to make it difficult for an attacker to determine whether any individual's data was included in the dataset. This is done by adding carefully calibrated random "noise" to the data or to the results computed from it, which masks the contribution of individual records.

How Does It Work?

There are a few techniques used to achieve differential privacy:

- Noise Addition: Adding random noise to the data or to query results to hide individual records.

- Sensitivity Calibration: Scaling the noise to the query's sensitivity, meaning the most that any one person's record can change the result.

- Privacy Budget: Setting a limit (usually written as epsilon) on the total privacy loss allowed across all analyses or queries. Each query spends part of the budget.
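
To make the three ideas concrete, here is a minimal sketch that assumes the Laplace mechanism (one standard way to add noise; the article does not name a specific mechanism) applied to a counting query. The function and class names and the heart-rate readings are hypothetical. The budget tracker assumes basic sequential composition, where the epsilon values of successive queries simply add up.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float,
                  rng: random.Random) -> float:
    """Noisy count of matching records.

    Adding or removing one person changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    sensitivity = 1.0
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(sensitivity / epsilon, rng)


class PrivacyBudget:
    """Tracks cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon: float) -> None:
        self.total = total_epsilon
        self.spent = 0.0

    def spend(self, epsilon: float) -> None:
        # Refuse any query that would push total privacy loss past the limit.
        if self.spent + epsilon > self.total + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self.spent += epsilon


rng = random.Random(0)
heart_rates = [62, 71, 88, 95, 103, 110, 67, 120]  # hypothetical wearable data
budget = PrivacyBudget(total_epsilon=1.0)

budget.spend(0.5)
noisy = private_count(heart_rates, lambda bpm: bpm > 100, epsilon=0.5, rng=rng)
print(f"noisy count of readings above 100 bpm: {noisy:.2f}")
```

A smaller epsilon means a larger noise scale and stronger privacy, and once the budget is spent further queries are refused. Production systems typically use vetted libraries and careful floating-point handling rather than a hand-rolled sampler like this one.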

Using Differential Privacy in Edge AI

Differential privacy is especially useful for edge AI systems that process and analyze data in real-time. By adding noise to the data, edge devices can protect individual records, even if the data is sent to a central server or cloud.
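
One way a device can add noise before anything leaves it is randomized response, a classic local differential privacy technique. The sketch below is an illustration under that assumption. The function names, the simulated device population, and the "motion detected" bit are all hypothetical.

```python
import math
import random
from typing import List


def truth_probability(epsilon: float) -> float:
    """Probability of reporting the true bit under epsilon-local DP."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))


def randomize_bit(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Runs on the device: report the true bit, or flip it at random."""
    if rng.random() < truth_probability(epsilon):
        return true_bit
    return not true_bit


def estimate_rate(reports: List[bool], epsilon: float) -> float:
    """Runs on the server: undo the known flipping bias in aggregate."""
    p = truth_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


rng = random.Random(42)
# Hypothetical fleet: about 30% of devices detected motion in the last hour.
true_bits = [rng.random() < 0.3 for _ in range(50_000)]
reports = [randomize_bit(bit, epsilon=1.0, rng=rng) for bit in true_bits]
print(f"estimated rate: {estimate_rate(reports, epsilon=1.0):.3f}")
```

The server can recover a useful population-level estimate, but any single report is deniable because it may have been flipped. The estimate gets noisier as epsilon shrinks or as the number of devices drops.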

Edge AI systems can use differential privacy to:

| Benefit | Description |

|---|---|

| Protect User Data | Ensure user data is protected from unauthorized access or inference |

| Comply with Regulations | Meet data protection laws like GDPR and CCPA |

| Build Trust | Provide users a robust guarantee of privacy protection |

Pros and Cons

| Pros | Cons |

|---|---|

| Enhances data privacy | May reduce model accuracy |

| Reduces data breach risks | Requires careful noise management |

| Supports regulatory compliance | Can increase computational needs |

Secure Data Processing

Secure data processing is crucial for protecting user privacy in edge AI systems. This section covers two techniques that enhance privacy: secure multi-party computation and homomorphic encryption.

Secure Multi-Party Computation

Secure multi-party computation (SMC) allows multiple parties to jointly compute a function over their inputs without revealing those inputs to one another. This technique is useful for edge AI systems that need to process and analyze data from multiple sources in real-time.

With SMC, each party's data remains private, even when computations are performed jointly. For example, in a healthcare application, SMC can analyze patient data from multiple hospitals without revealing individual patient records. This enables creating accurate models and insights while maintaining patient privacy.
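
As a rough illustration of the hospital example, the sketch below uses additive secret sharing, one of the simplest building blocks of SMC. It assumes honest-but-curious parties and simulates all of them in one process with no networking. `MODULUS`, `make_shares`, and `secure_sum` are hypothetical names, and the patient counts are made up.

```python
import secrets
from typing import List

MODULUS = 2**61 - 1  # all arithmetic is done modulo this public number


def make_shares(value: int, n_parties: int) -> List[int]:
    """Split a private value into n random-looking shares that sum to it."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_values: List[int]) -> int:
    """Compute the total without any party seeing another party's value."""
    n = len(private_values)
    # Step 1: each party splits its value and sends share j to party j.
    all_shares = [make_shares(v, n) for v in private_values]
    # Step 2: party j adds up the shares it received (column j).
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS
                    for j in range(n)]
    # Step 3: only the partial sums are published and combined.
    return sum(partial_sums) % MODULUS


# Hypothetical patient counts held privately by three hospitals.
print(secure_sum([120, 340, 95]))
```

Each individual share is uniformly random, so a party holding one share learns nothing about the value it came from. Only the final total is revealed. Real protocols add authenticated channels and protections against parties that deviate from the protocol.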

Homomorphic Encryption

Homomorphic encryption enables computations on encrypted data without decrypting it first. This allows edge AI systems to process and analyze encrypted data in real-time, without compromising user privacy.

Homomorphic encryption is useful when data needs to be processed in the cloud or on a remote server. By encrypting the data before sending it, edge AI systems can ensure user data remains private, even if the cloud infrastructure is compromised.
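
The idea of computing on ciphertexts can be shown with a toy version of the Paillier cryptosystem, which supports addition of encrypted values. This sketch is for intuition only: the primes are tiny and completely insecure, the function names are hypothetical, and a real system should use a vetted library with keys of 2048 bits or more.

```python
import math
import secrets
from typing import Tuple


def keygen(p: int = 61, q: int = 53) -> Tuple[int, Tuple[int, int]]:
    """Return (public modulus n, private key). Toy primes, NOT secure."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse (Python 3.8+)
    return n, (lam, mu)


def encrypt(n: int, message: int) -> int:
    """Encrypt an integer 0 <= message < n using generator g = n + 1."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


def decrypt(n: int, private_key: Tuple[int, int], ciphertext: int) -> int:
    lam, mu = private_key
    x = pow(ciphertext, lam, n * n)
    return ((x - 1) // n * mu) % n


n, private_key = keygen()
# The device encrypts two hypothetical sensor readings before upload.
c1, c2 = encrypt(n, 120), encrypt(n, 75)
# The server adds them without ever seeing 120 or 75.
encrypted_total = add_encrypted(n, c1, c2)
# Only the key holder can read the result.
print(decrypt(n, private_key, encrypted_total))
```

Paillier is only additively homomorphic. Schemes that support arbitrary computation on encrypted data (fully homomorphic encryption) exist but are far more expensive, which is part of why this family of techniques is hard to run on edge hardware.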

| Technique | Description |

|---|---|

| Secure Multi-Party Computation | Allows joint computation without revealing individual records |

| Homomorphic Encryption | Enables computations on encrypted data without decrypting |

Strengths and Limitations

| Strengths | Limitations |

|---|---|

| Provides strong privacy guarantees | Can be computationally intensive |

| Enables secure data processing | May require specialized hardware/software |

| Supports compliance with regulations | Implementation complexity |

Real-World Applications

Secure data processing techniques like SMC and homomorphic encryption have various real-world applications in edge AI systems, including:

- Healthcare: Analyze patient data while maintaining privacy

- Finance: Process financial transactions securely

- IoT: Analyze sensor data from multiple devices without compromising user privacy

Privacy-Preserving Frameworks

Available Options

Several frameworks help protect privacy in edge AI systems. Some popular choices include:

- Differential Privacy Framework: Tools for adding random "noise" to data to hide individual records.

- Federated Learning Framework: Allows multiple parties to jointly train AI models without sharing raw data.

- Homomorphic Encryption Framework: Enables computations on encrypted data without decrypting it first.

Key Features

These frameworks share features to safeguard privacy:

- Data Encryption: Encrypting data to prevent unauthorized access.

- Anonymization: Removing identifiable information from data.

- Secure Multi-Party Computation: Allowing joint computations without revealing individual records.

- Differential Privacy: Ensuring outputs don't reveal too much about individuals.
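
As a small illustration of the anonymization feature, the sketch below drops direct identifiers and coarsens location before a record leaves the device. The field names (`name`, `email`, `phone`, `lat`, `lon`) and the `anonymize` helper are assumptions made for this example, not part of any particular framework.

```python
from typing import Any, Dict

# Hypothetical set of fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def anonymize(record: Dict[str, Any], precision: int = 1) -> Dict[str, Any]:
    """Drop direct identifiers and round coordinates to reduce precision."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    for key in ("lat", "lon"):
        if key in cleaned:
            # One decimal place of latitude is roughly 11 km of resolution.
            cleaned[key] = round(cleaned[key], precision)
    return cleaned


reading = {"name": "A. User", "email": "a@example.com",
           "lat": 52.5163, "lon": 13.3777, "temp_c": 21.5}
print(anonymize(reading))
```

Removing identifiers on its own is a weak guarantee, since the remaining fields can sometimes be linked back to a person. That is one reason it is usually combined with the other features in this list.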

Framework Comparison

| Framework | Ease of Use | Efficiency | Privacy Protection |

|---|---|---|---|

| Differential Privacy | High | Medium | Strong |

| Federated Learning | Medium | High | Moderate |

| Homomorphic Encryption | Low | Low | Very Strong |

Suitable Use Cases

Different frameworks suit different needs:

- Healthcare: Differential Privacy Framework protects patient data privacy.

- Finance: Federated Learning Framework enables secure joint model training.

- IoT: Homomorphic Encryption Framework allows processing encrypted sensor data.

Challenges and Future Directions

Limited Resources

Edge devices like smart home gadgets, wearables, and IoT sensors have limited computing power, memory, and battery life. This makes it hard to run complex AI models, and it forces trade-offs between model accuracy, processing speed, and energy use. For example, a model with many parameters may not fit on an edge device at all, so developers rely on techniques like model pruning, knowledge distillation, or quantization to shrink the model and reduce the workload.
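
Model pruning, mentioned above, can be sketched with simple magnitude pruning: the weights closest to zero are assumed to matter least and are set to zero. This is one pruning strategy among several, and `prune_by_magnitude` is a hypothetical helper operating on a flat list of weights rather than a real model.

```python
from typing import List


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the given fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


layer = [0.9, -0.05, 0.3, 0.01]
print(prune_by_magnitude(layer, sparsity=0.5))
```

Zeroed weights only save memory and compute when the runtime stores and executes the model in a sparse format, and pruned models are usually fine-tuned afterwards to recover accuracy.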

Privacy vs. Performance Balance

Adding privacy protection to edge AI systems often costs performance. Differential privacy adds noise that can lower model accuracy, while homomorphic encryption and secure multi-party computation add computation and communication overhead that increases latency. Developers must balance privacy protection against model performance based on the specific use case and its needs.
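
The accuracy side of this trade-off can be put into numbers for one simple case. The sketch assumes differential privacy implemented with Laplace noise of scale sensitivity / epsilon, a common choice. For that distribution the average absolute error equals the scale, so the cost of stronger privacy can be computed directly. The function name is hypothetical.

```python
def laplace_expected_error(sensitivity: float, epsilon: float) -> float:
    """Mean absolute error of Laplace noise with scale sensitivity/epsilon."""
    return sensitivity / epsilon


# A counting query has sensitivity 1. Smaller epsilon means stronger privacy.
for epsilon in (0.1, 0.5, 1.0, 5.0):
    error = laplace_expected_error(sensitivity=1.0, epsilon=epsilon)
    print(f"epsilon={epsilon}: expected absolute error is about {error:.1f}")
```

Under these assumptions, cutting epsilon by a factor of ten makes the answer about ten times noisier. Whether that is acceptable depends on how large the true counts are compared with the noise.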

Standards and Regulations Needed

Standards and regulations for privacy-preserving AI techniques are still lacking. This can cause confusion and slow down adoption. Governments and industry groups must set clear guidelines and rules to ensure edge AI systems prioritize privacy and security. Standardization efforts, like developing privacy-preserving frameworks and APIs, can help integrate privacy techniques into edge AI systems.

Future Research Areas

Several areas need more research to overcome challenges in using privacy-preserving AI on edge devices:

- Efficient privacy algorithms: Developing algorithms that balance privacy, model performance, and computing efficiency.

- Edge AI architecture: Designing edge AI systems that prioritize privacy, security, and scalability.

- Explainability and transparency: Developing techniques to explain AI decisions while maintaining privacy.

- User-centric design: Incorporating user-focused design to ensure edge AI systems prioritize user privacy and trust.

| Research Area | Description |

|---|---|

| Efficient Privacy Algorithms | Balancing privacy, performance, and efficiency |

| Edge AI Architecture | Prioritizing privacy, security, and scalability |

| Explainability and Transparency | Explaining AI decisions while preserving privacy |

| User-Centric Design | Ensuring user privacy and trust in edge AI systems |

Conclusion

Key Privacy Techniques Summary

This article covered three main techniques to protect privacy in edge AI systems:

1. Federated Learning

- Trains AI models across devices without sharing local data

- Devices calculate model updates locally and share only those updates

- Keeps data on devices, reducing breach and access risks

2. Differential Privacy

- Adds calibrated random "noise" to data or query results to hide individual records

- Makes it difficult to determine if an individual's data was included

- Protects user data privacy while allowing analysis

3. Secure Data Processing

- Secure Multi-Party Computation: Allows joint computation without revealing individual records

- Homomorphic Encryption: Enables computations on encrypted data without decrypting

Continued Research Importance

As edge AI systems become more common, research into privacy techniques must continue. Future work should focus on:

- Improving efficiency and scalability

- Addressing new privacy challenges

- Making techniques easier to use and implement

| Research Area | Goal |

|---|---|

| Efficient Algorithms | Balance privacy, performance, and computing needs |

| Edge AI Architecture | Prioritize privacy, security, and scalability |

| Explainability | Explain AI decisions while preserving privacy |

| User-Centric Design | Ensure user privacy and trust in edge AI |

Final Thoughts

Privacy techniques are crucial for trustworthy edge AI systems. They allow processing data privately, protecting user information while providing valuable insights. As edge AI evolves, prioritizing privacy and security is essential. Systems must be designed with user trust and protection in mind.
